Date: Wednesday, June 26, 2024

Work Undertaken Summary

Risks

Time Spent

0.75hr TMA03

5hr TMA03

1hr Reading - “What we learned from a year of building with LLMs”

2.75hr TMA03

0.25hr Reading - “phi3 update”

0.75hr TMA03 - Main loop diagram

Questions for Tutor

Next work planned

Complete reading section then move on to project work.

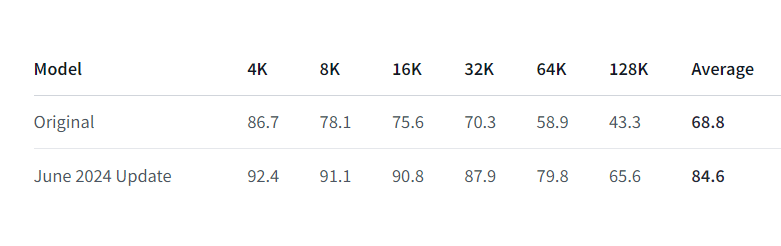

New Dev Work: Try the updated phi3-mini, which has much improved long-context scores. It might not get stuck in a loop.

microsoft/Phi-3-mini-128k-instruct · Hugging Face

Raw Notes

StoryWeaver UML

classDiagram

direction RL

namespace GitTrackedClasses {

class IndexFileSection

class DocumentSection

class IndexFile

}

note for DocumentSection "These classes are serialized\n and saved within the directory\n that is tracked in Git."

class IndexFileSection {

+str path

}

class DocumentSection {

+str tag

+str prompt

+str last_read_story

+str content

+str path

}

class IndexFile {

+IndexFileSection[] sections

}

IndexFile o-- "*" IndexFileSection

DocumentSection .. Database

IndexFile .. Database

class Database {

-Path database_dir

-Repo repo

+Database(Path database_dir)

-index_file_path() Path

+read_index_file() IndexFile

+write_index_file(IndexFile index_file)

+read_section(IndexFileSection section) DocumentSection

+write_section(DocumentSection document_section)

+add_section(str tag, str prompt)

+commit(str message)

}

class StoryFile {

+int id

+str name

+str content

+str[] tags

+str file_name

}

note for StoryFile "Representation\n of a single story\n read from the\nfilesystem"

StoryFile .. Model

Message .. BasicModel

class Model {

+combine(StoryFile[] batch,\nDocumentSection section_document)* str

}

Model .. DocumentSection

class DummyModel {

+combine(StoryFile[] batch,\nDocumentSection section_document) str

}

class PromptFile {

str system_prompt

PromptFileExample[] examples

PromptFileExample test

}

class PromptFileExample {

str prompt

str[] batch

str input

str output

}

PromptFile o-- "*" PromptFileExample

BasicModel *-- PromptFile

class BasicModel {

-AutoTokenizer tokenizer

-AutoModelForCausalLM model

-PromptFile prompt

-Path prompt_path

-bool has_system_prompt

_load_model()* void

_run_messages(Message[] messages)* str

+combine(StoryFile[] batch, DocumentSection section_document) str

+BasicModel(Path prompt_path, bool has_system_prompt)

#add_message(Message[] messages, Message message) Message[]

_hydrate_messages() Message[]

-read_prompt() void

_make_example_input(PromptFileExample example) Message

_make_example_output(PromptFileExample example) Message

__make_message(DocumentSection section, StoryFile[] batch) Message

__chat_dict(DocumentSection section, StoryFile[] batch) Message[]

__format_batch(str[] batch) str

__format_message(str prompt, str input_documentation, str[] batch) str

+test() str

+hydrate() Message[]

}

class Message {

+MessageRole role

+str content

}

Message .. MessageRole

class MessageRole {

<<enumeration>>

SYSTEM

USER

ASSISTANT

}

class Llama3Model {

+Llama3Model()

_load_model()* void

_run_messages(Message[] messages) str

}

class Llama3_8B {

_load_model() void

}

class Llama3_70B {

_load_model() void

}

class Phi3Model {

+Phi3Model()

_load_model()* void

_run_messages(Message[] messages) str

}

class Phi3_14B {

_load_model() void

}

class Phi3_7B {

_load_model() void

}

Model <|-- DummyModel

Model <|-- BasicModel

BasicModel <|-- Llama3Model

BasicModel <|-- Phi3Model

Llama3Model <|-- Llama3_8B

Llama3Model <|-- Llama3_70B

Phi3Model <|-- Phi3_14B

Phi3Model <|-- Phi3_7B
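The few-shot message building implied by `PromptFile`, `PromptFileExample` and `Message` above could look roughly like the sketch below. It is only an illustration under assumptions: the text layout in `format_message`, the free function `hydrate_messages` (standing in for `_hydrate_messages`), and the way a missing system role is handled when `has_system_prompt` is false are guesses, not the actual StoryWeaver code.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class MessageRole(Enum):
    SYSTEM = "system"
    USER = "user"
    ASSISTANT = "assistant"


@dataclass
class Message:
    role: MessageRole
    content: str


@dataclass
class PromptFileExample:
    prompt: str
    batch: list[str]
    input: str
    output: str


@dataclass
class PromptFile:
    system_prompt: str
    examples: list[PromptFileExample] = field(default_factory=list)
    test: Optional[PromptFileExample] = None


def format_message(prompt: str, input_documentation: str, batch: list[str]) -> str:
    # Hypothetical layout: the real template is not recorded in these notes.
    stories = "\n".join(f"- {story}" for story in batch)
    return (
        f"{prompt}\n\n"
        f"Current documentation:\n{input_documentation}\n\n"
        f"Stories:\n{stories}"
    )


def hydrate_messages(prompt_file: PromptFile, has_system_prompt: bool) -> list[Message]:
    """Turn the prompt file into a few-shot chat history."""
    messages: list[Message] = []
    if has_system_prompt:
        messages.append(Message(MessageRole.SYSTEM, prompt_file.system_prompt))
    for example in prompt_file.examples:
        user_text = format_message(example.prompt, example.input, example.batch)
        if not has_system_prompt and not messages:
            # Assumption: models without a system role get the system prompt
            # prepended to the first user turn instead.
            user_text = f"{prompt_file.system_prompt}\n\n{user_text}"
        messages.append(Message(MessageRole.USER, user_text))
        messages.append(Message(MessageRole.ASSISTANT, example.output))
    return messages
```

The real request would then append one more user message built from the current `DocumentSection` and the next batch of stories before calling `_run_messages`.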

flowchart

1.1["`Run Entrypoint`"]

1.2["`Init DB`"]

1.3[Read index file, determine current state of all sections]

1.4[Read all user stories into a mapping from Section/tag to ordered list of stories to process]

2[Read current section state]

3[Get next batch of stories for section]

3.1[Use LLM to combine current section with all stories from batch]

3.2[Update last read pointer so processing resumes from correct place]

3.3[Write new section to disk, commit change to Git]

4{Are there more stories to process for this section?}

5.1[Move to next section]

5{Are there more sections to process?}

6[End]

1.1 --> 1.2 --> 1.3 --> 1.4 --> 2 --> 3

3 --> 3.1 --> 3.2 --> 3.3 --> 4

4 -->|yes|3

4 -->|no|5

5 -->|no|6

5 -->|yes|5.1-->2
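The main loop in the flowchart can be sketched in Python as below. This is a minimal sketch, not the project's implementation: the `run` and `pending_stories` functions, the `batch_size` parameter and the `save` callback (standing in for `Database.write_section` followed by `Database.commit`) are hypothetical, and it assumes `last_read_story` holds the `file_name` of the last story already merged into the section.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class StoryFile:
    id: int
    name: str
    content: str
    tags: list[str]
    file_name: str


@dataclass
class DocumentSection:
    tag: str
    prompt: str
    last_read_story: str
    content: str
    path: str


class Model(ABC):
    @abstractmethod
    def combine(self, batch: list[StoryFile], section_document: DocumentSection) -> str:
        ...


class DummyModel(Model):
    """Stand-in model: appends each story's text instead of calling an LLM."""

    def combine(self, batch, section_document):
        return section_document.content + "".join(f"\n{s.content}" for s in batch)


def pending_stories(section: DocumentSection, stories: list[StoryFile]) -> list[StoryFile]:
    # Resume after the last story that was already processed (assumed pointer).
    names = [s.file_name for s in stories]
    if section.last_read_story in names:
        return stories[names.index(section.last_read_story) + 1:]
    return stories


def run(
    sections: list[DocumentSection],
    stories_by_tag: dict[str, list[StoryFile]],
    model: Model,
    batch_size: int = 2,
    save: Optional[Callable[[DocumentSection], None]] = None,
) -> None:
    for section in sections:  # steps 5 and 5.1: one section at a time
        pending = pending_stories(section, stories_by_tag.get(section.tag, []))  # step 2
        while pending:  # step 4: more stories for this section?
            batch, pending = pending[:batch_size], pending[batch_size:]  # step 3
            section.content = model.combine(batch, section)  # step 3.1
            section.last_read_story = batch[-1].file_name  # step 3.2
            if save is not None:
                save(section)  # step 3.3: write to disk and commit
```

Saving after every batch, rather than once per section, is what lets an interrupted run pick up from the last read pointer.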